On January 27, 2026, Moonshot AI released Kimi K2.5, its most powerful open-source model to date.

Building upon its predecessor, Kimi K2.5 is not merely a language model; it is a native multimodal powerhouse featuring Visual-to-Code Generation, a revolutionary Agent Swarm execution paradigm, and state-of-the-art performance across key AI domains.

This article provides an in-depth analysis of Kimi K2.5's core features, its technical breakthroughs, and how it is poised to redefine real-world AI applications for developers and enterprises.

What is Kimi K2.5?

Kimi K2.5 is positioned as the strongest open-source model currently available. It was trained with continued pretraining over approximately 15 trillion (15T) mixed visual and text tokens, achieving a deep, unified understanding of both modalities.

Breakthrough 1: Agent Swarm — From Single-Agent to Coordinated Parallelism

The most compelling innovation in Kimi K2.5 is its Agent Swarm paradigm, a significant shift from the limitations of sequential, single-agent execution.

- Parallel Execution at Scale: Kimi K2.5 can autonomously orchestrate an agent swarm of up to 100 sub-agents, executing parallel workflows across up to 1,500 tool calls within a single task.

- PARL Technology: This capability is powered by Parallel-Agent Reinforcement Learning (PARL), which trains a reliable orchestrator agent to decompose complex tasks into parallelizable subtasks.

- Efficiency Leap: Compared to a single-agent setup, the Agent Swarm cuts execution time by up to a factor of 4.5, making it well suited to large-scale research, deep browsing, and complex multi-step coding.
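The fan-out pattern described above can be illustrated with a minimal sketch. It is not Moonshot AI's implementation and does not use any Kimi API: `run_sub_agent`, `orchestrate`, and `swarm_demo` are hypothetical names, the sub-agent is a stub that only sleeps, and the task decomposition that PARL trains the orchestrator to perform is assumed to have already happened. The only figure taken from the article is the cap of 100 sub-agents.

```python
import asyncio

MAX_SUB_AGENTS = 100  # swarm size cap quoted in the article


async def run_sub_agent(subtask: str) -> str:
    """Hypothetical stand-in for one sub-agent; a real one would call tools."""
    await asyncio.sleep(0.01)  # simulate tool-call latency
    return f"done: {subtask}"


async def orchestrate(subtasks: list[str], limit: int = MAX_SUB_AGENTS) -> list[str]:
    """Run independent subtasks concurrently, at most `limit` at a time."""
    gate = asyncio.Semaphore(limit)

    async def guarded(subtask: str) -> str:
        async with gate:  # blocks once `limit` sub-agents are already running
            return await run_sub_agent(subtask)

    # gather returns results in the same order as the input subtasks
    return await asyncio.gather(*(guarded(s) for s in subtasks))


def swarm_demo() -> list[str]:
    """Fan out five placeholder subtasks and collect their results."""
    return asyncio.run(orchestrate([f"source-{i}" for i in range(5)]))
```

Because the subtasks in this sketch are independent and spend their time waiting, the wall-clock time is close to that of the slowest subtask rather than the sum of all of them. That is the general reason parallel decomposition can shorten a task, although the 4.5x figure above is the article's reported number and is not something this toy example reproduces.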

Breakthrough 2: Coding with Vision — The "What You See Is What You Get" Development Experience

Kimi K2.5 demonstrates state-of-the-art capabilities in the coding domain, particularly for front-end development. It excels at understanding visual inputs and translating them into structured, executable code.

- Visual-to-Code Generation: Users can provide UI screenshots, design images, or even short videos, and Kimi K2.5 will convert them into functional code, supporting interactive layouts and rich animations.

- Autonomous Visual Debugging: The model can visually inspect its own output and iterate autonomously, using visual inputs and documentation lookup to refine the code—a breakthrough in self-correction.

- Complex Visual Reasoning: Its native multimodal training allows it to tackle complex problems that require both visual reasoning and coding, such as finding the shortest path in a complex maze image using algorithms like BFS.
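For readers unfamiliar with the algorithm mentioned in the maze example, here is a minimal breadth-first search (BFS) sketch. It assumes the maze image has already been converted into a text grid where `.` is an open cell and `#` is a wall. That conversion step, the grid format, and the function name `shortest_path_length` are illustrative assumptions, not output from Kimi K2.5.

```python
from collections import deque


def shortest_path_length(grid, start, goal):
    """Return the fewest 4-directional steps from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])  # (cell, distance from start)
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist  # BFS reaches each cell first via a shortest route
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == "." and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # goal is walled off


# Hypothetical 3x4 maze: '.' is open, '#' is a wall.
MAZE = [
    "..#.",
    ".##.",
    "....",
]

print(shortest_path_length(MAZE, (0, 0), (0, 3)))  # prints 7
```

BFS explores cells in order of increasing distance, so the first time it reaches the goal it has found a shortest path. In this maze the only route runs down the left column, along the bottom row, and up the right column, which takes seven steps.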

Breakthrough 3: Native Multimodal Intelligence — Unified Vision and Language

Unlike models that patch vision and language together, Kimi K2.5 is built on a unified architecture, eliminating the gap between perception and reasoning.

- Unified Architecture: Jointly trained on 15T mixed tokens, allowing the model to process text, images, and video simultaneously without losing context between modalities.

- Deep Visual Reasoning: Enables the model to perform complex tasks like visual pathfinding and document layout analysis with the same precision as text-based logic.

- Benchmark Leadership: Achieves state-of-the-art results on multimodal benchmarks, including 78.5% on MMMU Pro and 86.6% on VideoMMMU, outperforming many closed-source rivals.

Breakthrough 4: Enterprise-Grade Office Productivity

Kimi K2.5 seamlessly integrates agentic intelligence into real-world knowledge work, moving beyond simple Q&A to deliver expert-level, end-to-end outputs.

- High-Density Input Handling: It can reason over large, high-density inputs, such as complex financial models or long academic papers.

- Diverse Professional Outputs: The K2.5 Agent can coordinate multi-step tool use to deliver professional outputs like documents, spreadsheets (with Pivot Tables), PDFs (with LaTeX equations), and slide decks—all directly through conversation.

- Scaling to Long-Form Content: It supports long-form outputs, such as 10,000-word papers or 100-page documents, significantly accelerating tasks that previously took days.

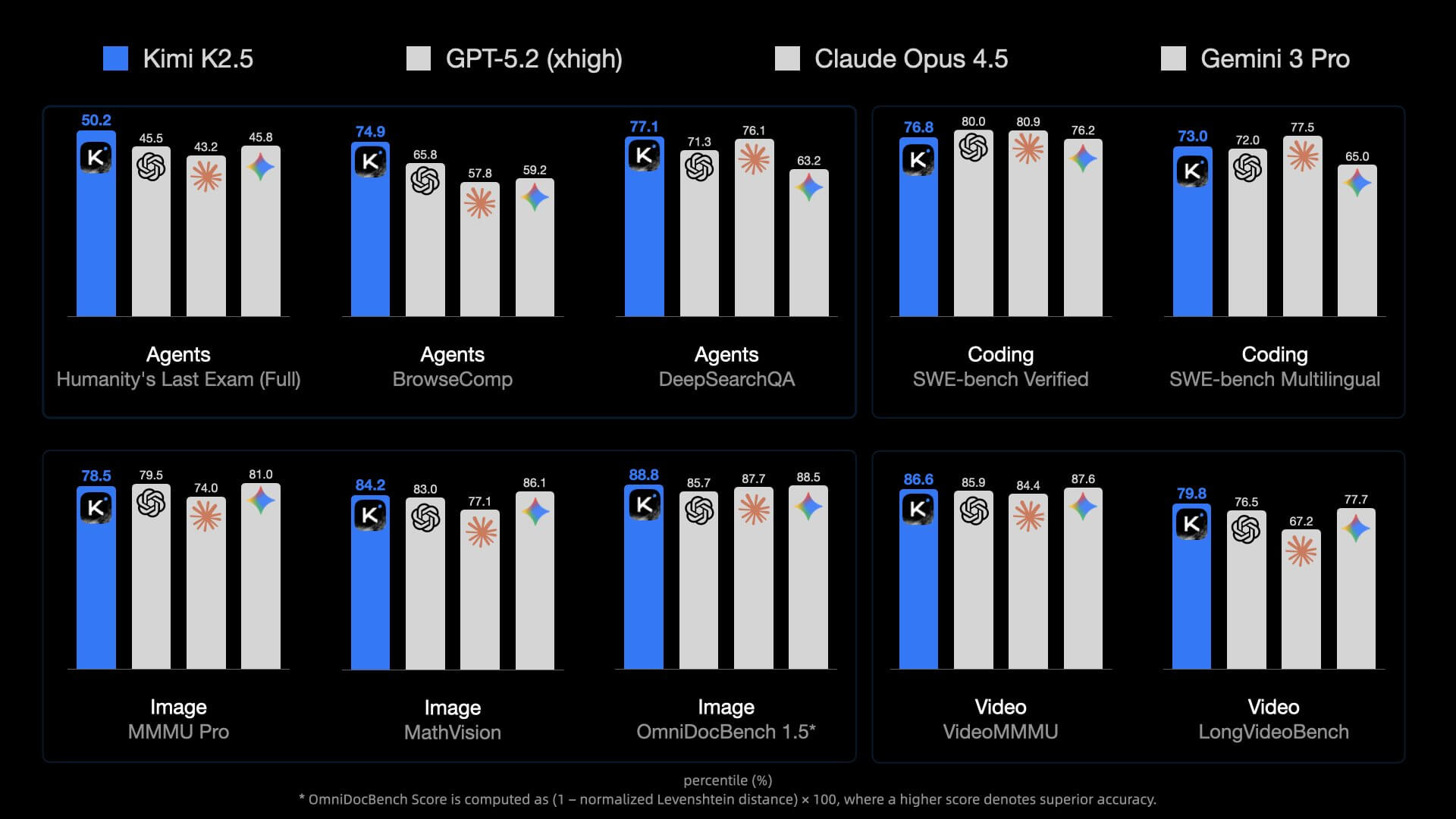

Benchmark Performance: Leading the Open-Source Frontier

Kimi K2.5 has demonstrated competitive performance against top closed-source models (such as GPT-5.2 and Claude Opus 4.5) across numerous industry benchmarks:

- Agentic Benchmarks: Strong results on HLE (50.2% w/ tools) and BrowseComp (74.9% w/ context management).

- Vision & Video: Achieved 78.5% on MMMU Pro and 86.6% on VideoMMMU, showcasing superior multimodal understanding.

- Coding: Scored 76.8% on SWE-bench Verified, confirming its strength in real-world software engineering tasks.

Impact and Future Applications

Kimi K2.5's unique combination of visual intelligence and parallel agent execution is set to have a profound impact across several key sectors, moving AI from a helpful tool to an autonomous partner.

- Software Engineering: The Coding with Vision capability enables rapid prototyping from design mockups and facilitates autonomous visual debugging, significantly lowering the barrier for front-end development.

- Enterprise Productivity: The Agent Swarm architecture automates large-scale research and complex multi-step workflows, reducing weeks of manual data synthesis to hours of parallel execution.

- AGI Advancement: As a high-performance open-source model, Kimi K2.5 provides a scalable blueprint for coordinated autonomous systems, accelerating the global research community's progress toward Artificial General Intelligence.

Comparison

The benchmark results shown above are officially released by the Kimi team and compare Kimi K2.5 with leading closed-source models, including GPT-5.2 (xhigh), Claude Opus 4.5, and Gemini 3 Pro, across agentic reasoning, coding, image understanding, and video benchmarks. All scores are reported as percentages, where higher values indicate stronger performance.

According to the official results, Kimi K2.5 demonstrates particularly strong performance on agentic benchmarks such as Humanity’s Last Exam (Full) and BrowseComp, while remaining competitive on coding tasks (SWE-bench Verified and Multilingual) and multimodal benchmarks including MMMU Pro, OmniDocBench, VideoMMMU, and LongVideoBench. Overall, the comparison highlights Kimi K2.5’s balanced capabilities across reasoning, coding, and multimodal understanding within an open-source framework.

Conclusion

The release of Kimi K2.5 marks a pivotal moment for open-source AI, representing a significant leap from conversational assistants to autonomous execution agents. With its unique Agent Swarm architecture and exceptional Visual-to-Code capabilities, Kimi K2.5 is accelerating the path toward AGI by tackling real-world constraints and complex, long-horizon tasks.

Whether you are a professional seeking to automate complex workflows or a developer pushing the boundaries of AI applications, Kimi K2.5 offers a powerful, flexible, and open platform for innovation.